Gemini vs GPT-4: A Case Study in Artificial Intelligence and the Rise and Fall of Big Leaps in AI

Prompted by recent concerns about the threat that developments from OpenAI and others may pose to Google’s future, the company developed and launched Gemini with striking speed compared to its previous projects.

So, let’s get to the important question: how does GPT-4 stack up against Gemini? It has been on everyone’s mind at one point or another. “We’ve done a very thorough analysis of the systems side by side, and the benchmarking,” Hassabis says. Google ran 32 well-established benchmarks comparing the two models, ranging from broad overall tests such as the Massive Multitask Language Understanding (MMLU) benchmark to one that measures each model’s ability to generate Python code. Hassabis says, with a smile, that he believes Gemini is substantially ahead on 30 of the 32 benchmarks. “Some of them are very narrow. Some of them are larger.”

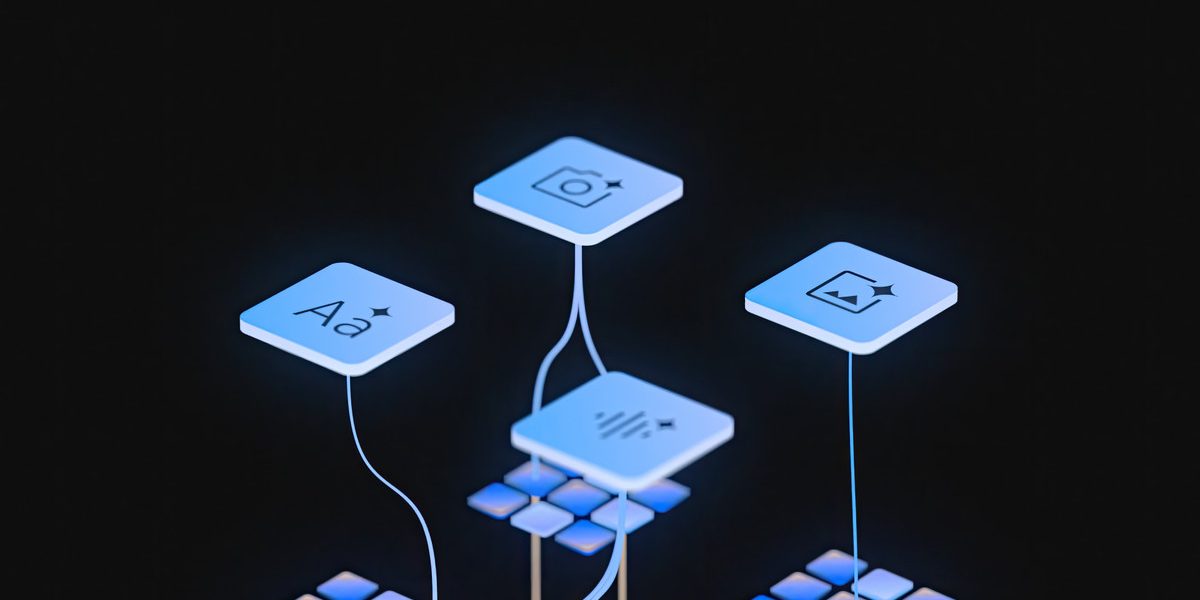

Right now, Gemini’s most basic models are text in and text out, but more powerful models like Gemini Ultra can work with images, video, and audio. Hassabis says Gemini will become more general still, since there are modalities yet to add, such as action and touch. Over time, he says, Gemini will gain more senses, become more aware, and grow more accurate and grounded in the process. “These models just sort of understand better about the world around them.” These models still hallucinate, of course, and they still have biases and other problems. But the more they know, Hassabis says, the better they’ll get.

Benchmarks are just benchmarks, though, and ultimately the true test of Gemini’s capability will come from everyday users who want to use it to brainstorm ideas, look up information, write code, and much more. Google has also built AlphaCode 2, a new code-generation system aimed at programming competitions, and code generation looks like it could be a killer app. Google CEO Sundar Pichai claims users will notice the improvement in the model.

Demis Hassabis is no stranger to big leaps in artificial intelligence. He became famous after AlphaGo, a program developed by his company DeepMind, taught itself to play the board game Go with skill and ingenuity.

Native Multimodality: Hassabis on How Gemini Differs from GPT-4

“Until now, most models have sort of approximated multimodality by training separate modules and then stitching them together,” Hassabis says, in what appeared to be a veiled reference to OpenAI’s technology. That approach works for some tasks, he says, but it cannot support deep, complex reasoning across modalities.

In September, OpenAI launched an upgrade to ChatGPT that lets it take images and audio as input. OpenAI has not disclosed the technical details of how GPT-4 achieves its multimodal capabilities.