What to Expect from Google I/O 2024: A Powerful, Interactive, and User-Favored Live Blog for Software, Hardware, and Services

Senior writers Lauren and Paresh Dave are at I/O in Mountain View, along with staff writer Reece Rogers. Once we are underway, they will provide live updates and commentary along with senior writer Will Knight and editorial director Michael Calore.

Google’s presentation will get started at 10 am Pacific, 1 pm Eastern. We’ll kick off the live blog about 30 minutes prior to that, so tune in right here at 9:30 am Pacific, 12:30 pm Eastern. This text will disappear and will be replaced by the feed of live updates, just like magic.

You may be asking, why are we publishing a live blog when the whole show is also being streamed on YouTube? The answer is, we love live blogs. The real answer is that the I/O keynote is about two hours long. It’s a marketing presentation, and while we expect Google to break some news in this keynote, the content on your screen will of course be missing a lot of the necessary context around it: how Google’s AI-powered search offerings compare to the competition, how the new Gemini chatbot features stack up to OpenAI’s new GPT-4o, or what Android’s new security features mean for users like you. That’s what the live blog is for: to distill and analyze the news coming from the stage and to give you the context that helps you become better informed. Also, live blogs are just fun for us to do. Let us have this, OK?

The I/O keynote contains valuable information for everyone who uses Google’s software, hardware, and services. So, you can expect lots of user-facing announcements, including new features coming to Android 15, Google search, and the Gemini suite of AI-powered tools. There will likely be a large amount of artificial intelligence news. Google has long been a leader in AI tech, but in the past two years it has ceded some ground to competitors like OpenAI, Anthropic, Perplexity, and Microsoft. This is the time for Google to let us know how it will push forward in this competitive space.

You can read our full rundown of what to expect from Google I/O 2024. We’ll be back at 9:30 Pacific for our commentary. The live event starts half an hour later.

Circle to Search: How Google’s Next Generation of Language Models Grows to Compute with What’s Happening on the Screen and Where It Might Fall

If you tapped and held the home button, you would be shown helpful contextual information related to what’s on the screen. Talking about a movie with a friend? Now on Tap could get you details about the title without having to leave the messaging app. Looking at a restaurant in Yelp? The phone could surface OpenTable recommendations with just a tap.

I was just out of college, and the new feature felt fantastic: it could understand what was happening on the screen and predict the actions you would want to take. It was one of my favorite features. It was great in its own right but not the same.

It starts with Circle to Search, which is Google’s new way of approaching Search on mobile. Much like the experience of Now on Tap, Circle to Search—which the company debuted a few months ago—is more interactive than just typing into a search box. You circle what you want to look for on the screen. Burke says, “It’s a very visceral, fun, and modern way to search … It skews younger as well because it’s so fun to use.”

In an interview with WIRED ahead of the event, he said that they thought they could make a “dramatic amount” of improvements to search using the generative AI model that launched last year. “People’s time is valuable, right? They deal with things that are hard. If you have an opportunity with technology to help people get answers to their questions, to take more of the work out of it, why wouldn’t we want to go after that?”

Google is rolling its latest mainstream language model, Gemini 1.5 Pro, into the sidebar for Docs, Sheets, Slides, Drive, and Gmail. Next month, it will become a general-purpose assistant within Workspace that can fetch information from any and all content in your Drive, no matter where you are. It will also be able to do things for you, such as write emails that incorporate information from a document you are currently looking at or remind you later to respond to an email. All paid Gemini subscribers will get access to the features in the next month.

It’s as though Google took the index cards for the screenplay it’s been writing for the past 25 years and tossed them into the air to see where the cards might fall. Also: The screenplay was written by AI.

The changes to the search engine have been going on for a long time. A year ago the company carved out a section of its Search Labs that allowed users to try experimental new features. The big question since has been whether, or when, those features would become a permanent part of Google Search. The answer is, well, now.

Google says it has made a customized version of its Gemini AI model for these new Search features, though it declined to share any information about the size of this model, its speeds, or the guardrails it has put in place around the technology.

One example shown to WIRED came in response to a query about where to see the northern lights. Instead of listing web pages, Google will tell you in authoritative text that the best places to see the northern lights, aka the aurora borealis, are in the Arctic Circle in places with minimal light pollution. It will also offer a link to NordicVisitor.com. It then adds that other places to see the northern lights include Russia and Canada.

Google Lens already lets you search for something based on images, but now Google’s taking things a step further with the ability to search with a video. You can ask a question in a video, and the machine will try to pull up relevant answers from the web.

Google is rolling out a new feature this summer that could be a boon for just about anyone with years, or even more than a decade, of photos to sift through. “Ask Photos” lets Gemini pore over your Google Photos library in response to your questions, and the feature goes beyond just pulling up pictures of dogs and cats. In a demo, Google’s CEO asked what his license plate number was. The response was followed by a picture so he could make sure the number was correct.

Google’s Project Astra is a multimodal AI assistant that the company hopes will become a do-everything virtual assistant that can watch and understand what it sees through your device’s camera, remember where your things are, and do things for you. Astra powered many of the most impressive demos this year, and the company is aiming for it to be an honest-to-goodness agent that can also do things on your behalf.

Veo, Google’s new generative AI model, can output high-definition video from text, image, and video-based prompts. Videos can be produced in a variety of styles, including aerial shots or time-lapses. Veo is offered to creators for use in some videos on YouTube, but the company is also trying to get Veo used in movies.

Voice Chatbots for Android and Windows Using Google Calendar, Tasks, and Keep: An Overview of Gemini Live Features and Future Trends

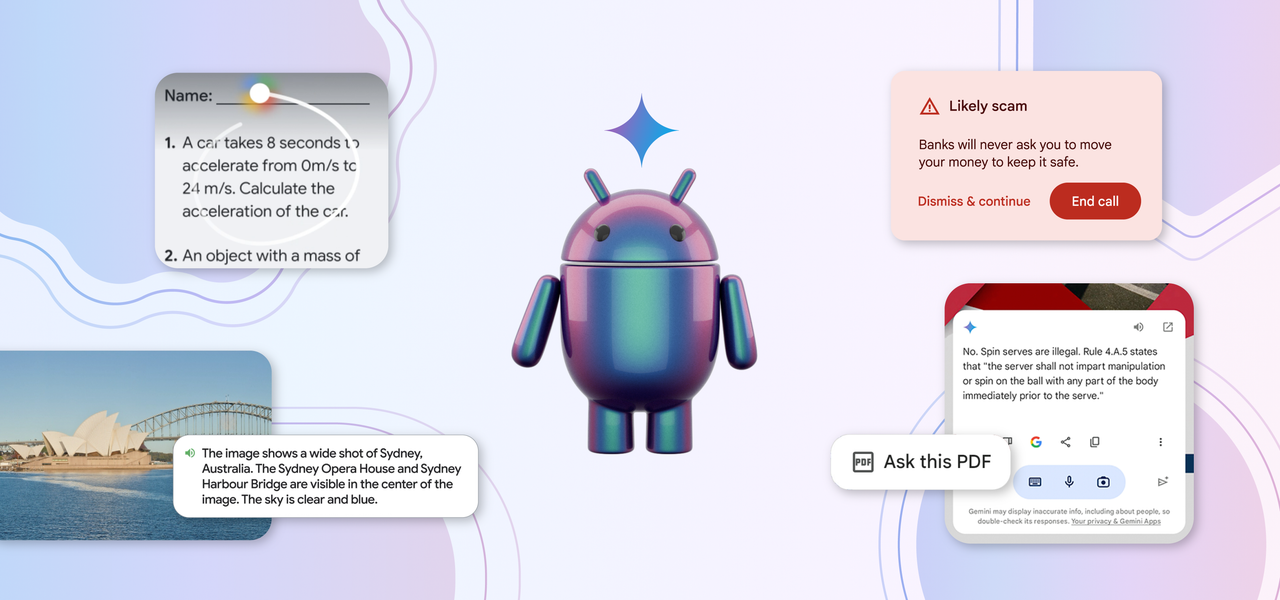

The new Gemini Live feature aims to make voice chats with Gemini feel more natural. The chatbot’s voice will be updated with some extra personality, and users will be able to interrupt it mid-sentence or ask it to watch through their smartphone camera and give information about what it sees in real time. Gemini is also getting new integrations that let it update or draw info from Google Calendar, Tasks, and Keep, using multimodal features to do so (like adding details from a flyer to your personal calendar).

If you’re on an Android phone or tablet, you can now circle a math problem on your screen and get help solving it. Google’s AI won’t solve the problem for you — so it won’t help students cheat on their homework — but it will break it down into steps that should make it easier to complete.

Android phones will be able to look for red flags, such as conversation patterns common to scammers, and then pop up warnings like the one above. The company promises to offer more details on the feature later in the year.

Users will be able to ask Gemini questions about videos on screen, and it will answer based on the automatic captions. For paying Gemini Advanced users, it can also offer information from PDFs. There will be a lot of updates for Gemini in the next few months.

Google announced that it’s adding Gemini Nano, the lightweight version of its Gemini model, to Chrome on desktop. The built-in assistant will use on-device AI to help you generate text for social media posts, product reviews, and more from directly within Google Chrome.

The company says it can now detect AI-generated content in videos made with its Veo video generator, and that it will also embed watermarking in the content Veo creates.