Can Social Media Companies Do More? Artificial Intelligence and the Power of GenAI: Challenges for Political Leaders and Regulatory Reform

Can social-media firms do more? WhatsApp’s decentralized structure creates both opportunities and challenges for content distribution. Because information can spread widely through private chats and groups, it also complicates efforts to regulate and moderate content. GenAI’s ability to produce personalized, targeted content at scale could amplify existing biases and deepen echo chambers. Without effective moderation mechanisms, platforms risk becoming breeding grounds for misinformation and propaganda.

Is there a way to level the playing field? GenAI could disrupt the power dynamics of content production. By democratizing content creation, it challenges the dominance of well-resourced political parties.

Rapid infrastructure development, including the modernization of train stations, is a key policy goal for the Bharatiya Janata Party (BJP), which seeks to present India to both domestic and international audiences as a swiftly modernizing economy. Our data do not show who created or disseminated such AI-generated images.

Crucially, mechanisms that target misinformation must not compromise user privacy. We firmly caution against proposals that might undermine end-to-end encryption on platforms such as WhatsApp.

New regulatory frameworks, developed collaboratively, can help standards keep pace with technological advances. Technology that can detect AI-generated content at scale is crucial in the age of synthetic media.

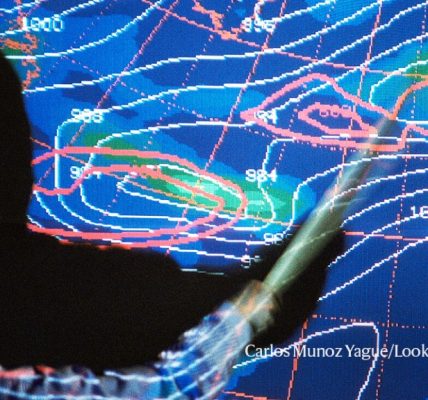

The ability of generative artificial intelligence to create seemingly authentic text is causing concern around the world. As many nations head to the polls this year, the potential of such content to fuel misinformation and manipulate political narratives is a troublesome addition to the list of challenges that election authorities face.

It is important to note that misinformation can easily be created through low-tech means, such as by misattributing an old, out-of-context image to a current event, or through the use of off-the-shelf photo-editing software. Thus, genAI might not change the nature of misinformation much.

Several high-profile examples of deepfakes have emerged over the past year. They include an audio recording in which a Slovakian politician appears to claim to have rigged an upcoming election, a depiction of an explosion outside the Pentagon building of the US Department of Defense, and images of Republican presidential candidate Donald Trump in the company of African American supporters.

For several years, we have been studying how political parties use messaging platforms to spread misinformation. We have collected data from messaging-app users in India through a privacy-preserving, opt-in method.

We were especially interested in content that spread virally on WhatsApp — marked by the app as ‘forwarded many times’. Such a tag is placed on content that is forwarded through a chain that involves at least five hops from the original sender. Five hops could mean that the message has already been distributed to a very large number of users, although WhatsApp does not disclose the exact number.

GenAI and Digital Media: Understanding Misinformation and Its Implications for Elections

Another theme evident in the genAI content was the projection of a brand of Hindu supremacy. For example, we uncovered AI-generated videos showing muscular Hindu saints mouthing offensive statements against Muslims, often referencing historical grievances. AI was also used to create images glorifying Hindu deities and men with exaggerated physiques sporting Hindu symbols, continuing a long-standing and well-documented propaganda tactic of promoting Hindu dominance in India. Media depicting fabricated scenes from the current war in Gaza subtly equated it with attacks by Muslims on Hindu pilgrims in India, showing how current events are used to target minorities in the Indian social and political context.

Nonetheless, these are early days for the technology, and our first findings showcase genAI’s power to manufacture compelling, culturally resonant visuals and narratives, extending beyond what conventional content creators might achieve.

Infrastructure projects form another category of misleading content. Images of a futuristic train station in Ayodhya, a city that many Hindus believe to be the birthplace of the god Ram, spread widely. The pictures show a spotless station with depictions of Lord Ram on its walls. Ayodhya has been the site of religious tensions, particularly since Hindu nationalists demolished a mosque there in December 1992 so that they could build a temple over its ruins.

The cases we document show the potential for personalized content in future elections, although our study suggests that genAI is unlikely to shape elections single-handedly at present. Even content that resembles animation can be very effective, because of its emotional appeal to viewers who already hold sympathetic beliefs. This emotional engagement, combined with the visual credibility that modern AI provides6 (blurring the line between animation and reality), could make such content persuasive, although this requires further study.

The world has a shared interest in curbing the spread of misinformation and keeping public debate focused on issues of evidence and fact. Which curbing measures work and for whom must be tested — and first, independent researchers need access to the data that will allow society to make informed choices.

In a second research article6, Wajeeha Ahmad, a doctoral candidate at Stanford University in California, and her colleagues show that companies that advertise through digital advertising exchanges are ten times more likely to end up advertising on misinformation sites than are companies that do not. Although firms can check where their ads are placed, most advertising decision-makers underestimate their companies’ involvement with misinformation, and consumers are similarly unaware.

The Holocaust happened. The COVID-19 vaccine saved millions of lives. There was no widespread fraud in the US presidential election. These are three statements of indisputable fact. Indisputable — and yet, in some quarters of the Internet, hotly disputed.

They appear in a Comment article1 by cognitive scientist Ullrich Ecker at the University of Western Australia in Perth and his colleagues, one of a series of articles in this issue of Nature dedicated to online misinformation. It is a critical time to focus on this subject. More people are online than ever, which has helped false and misleading information to spread faster, with consequences such as vaccine hesitancy and greater political polarization. In a year in which many countries hold elections, sensitivities around misinformation are heightened.

This period included the attack on the US Capitol on 6 January 2021, after which Twitter deplatformed 70,000 users deemed to be trafficking misinformation. The authors showed that this move was followed by a drop in the sharing of misinformation. It is hard to know whether the ban modified user behaviour directly, or whether the violence at the Capitol indirectly reduced the likelihood of users sharing the narratives about the ‘stolen’ 2020 presidential election that had fuelled it. Either way, the team writes, the circumstances constituted a natural experiment showing that social-media platforms can counter misinformation by enforcing their terms of use.

Such experiments are unlikely to be repeated. Lazer says that he and his colleagues were fortunate to have been collecting data before the attack, which allowed them to compare users’ behaviour before and after it. Since its takeover by entrepreneur Elon Musk, the platform, now rebranded X, has not only reduced content moderation and enforcement, but also limited researchers’ access to its data.