The Nature Podcast – Discovering Hidden Cities in Central Asia with Drone-mounted LiDAR, and a Watermark for Artificial Intelligence-Generated Text

Never miss an episode. Subscribe to the Nature Podcast on Apple Podcasts, Spotify, YouTube Music or your favourite podcast app. The Nature Podcast has an RSS feed too.

What one researcher found after repeatedly scanning her own brain to see how it responded to birth-control pills, and how high-altitude tree planting could offer refuge to an imperilled butterfly species.

Governments are looking to watermarking as a solution to the proliferation of AI-generated text. Problems remain, including getting developers to commit to using watermarks and coordinating their approaches. Researchers at the Swiss Federal Institute of Technology in Zurich have shown that watermarks are vulnerable to being removed and to being spoofed, the process of applying a watermark to text to give the false impression that it is AI-generated.

Drone-mounted LiDAR scans reveal two remote cities buried high in the mountains of Central Asia — plus, how a digital watermark could help identify AI-generated text.

Watermarking Artificial Intelligence: A welcome step forward and a need for improved security in AI-model development: a case study of NIST 2022

In a welcome move, DeepMind has made the model and underlying code for SynthID-Text free for anyone to use. The work is an important step forwards, but the technique itself is in its infancy. It needs to grow up fast.

There is an urgent need for improved technological capabilities to combat the misuse of generative AI, and a need to understand the way people interact with these tools — how malicious actors use AI, whether users trust watermarking and what a trustworthy information environment looks like in the realm of generative AI. These are all questions that researchers need to study.

However, even if the technical hurdles can be overcome, watermarking will only be truly useful if it is acceptable to companies and users. Regulation will probably force companies to take action, but it’s not clear if users will trust watermarking and similar technologies.

Authorities are trying to limit the harm that artificial intelligence could cause, and watermarking is thought to be a linchpin technology. The National Institute of Standards and Technology (NIST) was instructed by the President of the United States to set stringent safety standards for AI systems before they are made public. NIST is seeking public comments on its plans to reduce the risks of harm from AI, including the use of watermarking, which it says will need to be robust. No date has yet been set for finalizing the plans.

The authors’ approach to watermarking LLM outputs is not new. A version is also being tested by OpenAI, a company in San Francisco. However, there is limited information about the technology’s strengths and limitations. One of the most important contributions came in 2022, when Scott Aaronson, a computer scientist at the University of Texas at Austin, described, in a much-discussed talk, how watermarking can be achieved. Others have also made valuable contributions, among them John Kirchenbauer and his colleagues at the University of Maryland in College Park, who published a watermark-detection algorithm last year [3].

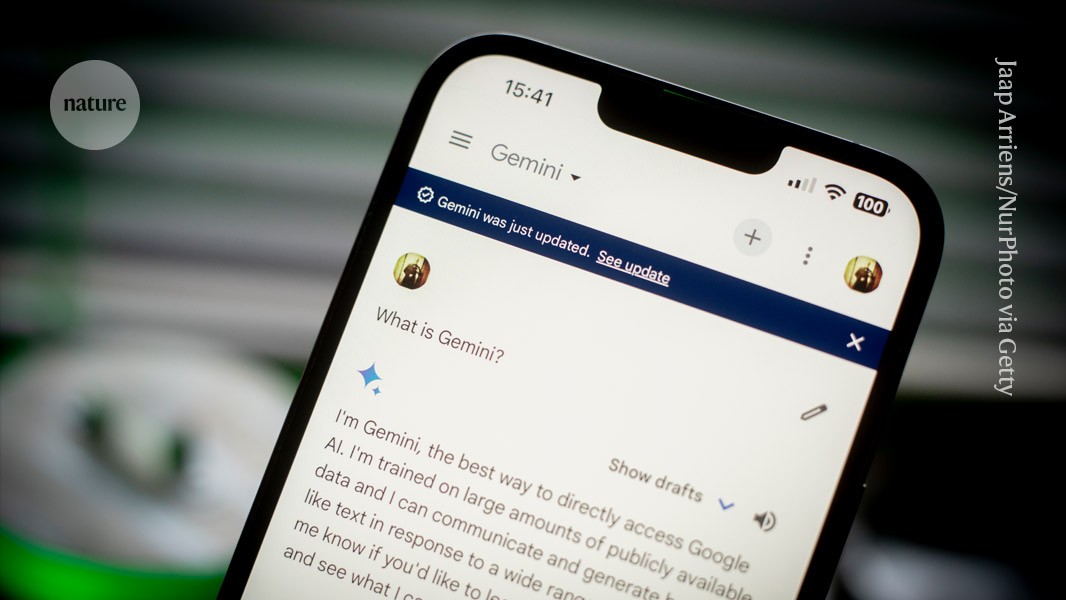

The tool has also been made open, so developers can apply their own such watermark to their models. “We would hope that other AI-model developers pick this up and integrate it with their own systems,” says Pushmeet Kohli, a computer scientist at DeepMind. Google is keeping its own key secret, so users won’t be able to use detection tools to spot Gemini-watermarked text.

Towards a Watermarking System for LLMs: An Algorithmic Approach to Collaborative Learning in Text Analysis

An LLM is a network of associations that are built up by training on billions of words. When given a string of text, the model assigns to each token in its vocabulary a probability of being next in the sentence. It is the job of the sampling algorithm to select, according to a set of rules, which token from this distribution to use.
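The ordinary, unwatermarked sampling step described above can be sketched in a few lines. This is a minimal illustration only: the four-token vocabulary, the raw scores and the function names are hypothetical, and a real LLM works with tens of thousands of tokens and more elaborate sampling rules.

```python
import math
import random


def softmax(logits):
    """Turn raw model scores into probabilities that sum to 1."""
    top = max(logits.values())
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def sample_next_token(probs, rng):
    """Pick one token at random, in proportion to its probability."""
    tokens = list(probs)
    weights = [probs[tok] for tok in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the token that follows "The cat sat on the".
logits = {"mat": 2.0, "sofa": 1.0, "roof": 0.5, "moon": -1.0}
probs = softmax(logits)

rng = random.Random(0)  # seeded so that the example is repeatable
next_token = sample_next_token(probs, rng)
```

Here `probs` is the distribution and `sample_next_token` stands in for the sampling algorithm. A watermark works by changing how this final selection is made.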

The watermarking sampling algorithm uses a cryptographic key to assign random scores to each possible token. Candidate tokens are pulled from the distribution, in numbers proportional to their probability, and placed in a ‘tournament’. There, the algorithm compares scores in a series of one-on-one knockouts, with the highest value winning, until only one token remains, which is then used in the text.
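The tournament can be sketched as follows. This is a simplified illustration under stated assumptions, not DeepMind's implementation: the scoring function `g_score` (a SHA-256 hash of the key, the recent context, the token and the round number), the number of rounds and the example distribution are all hypothetical choices made for this sketch.

```python
import hashlib
import random


def g_score(secret, context, token, layer):
    """Pseudorandom score between 0 and 1, fixed by the secret key,
    the recent context, the candidate token and the tournament round."""
    msg = "|".join([secret, " ".join(context), token, str(layer)])
    digest = hashlib.sha256(msg.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def tournament_sample(probs, secret, context, rng, layers=3):
    """Draw 2**layers candidates, then run one-on-one knockouts."""
    tokens = list(probs)
    weights = [probs[tok] for tok in tokens]
    # Candidates are drawn in proportion to probability; repeats are allowed.
    candidates = rng.choices(tokens, weights=weights, k=2**layers)
    for layer in range(layers):
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            score_a = g_score(secret, context, a, layer)
            score_b = g_score(secret, context, b, layer)
            # Higher score wins; a tie occurs when both candidates are the same token.
            winners.append(a if score_a >= score_b else b)
        candidates = winners
    return candidates[0]


# Hypothetical next-token distribution and key.
probs = {"mat": 0.5, "sofa": 0.3, "roof": 0.15, "moon": 0.05}
chosen = tournament_sample(probs, "demo-key", ["on", "the"], random.Random(0))
```

Because the candidates are drawn from the model's own distribution, likely tokens still dominate the tournament. The key only nudges the final choice towards tokens that happen to score highly under it.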

This elaborate system makes the watermark easier to detect. Detection involves running the same cryptographic code on generated text to look for the high scores that are indicative of ‘winning’ tokens. The system might also make the watermark more difficult to remove.
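Detection can be illustrated with a toy experiment. The sketch below generates text with and without a tournament-style watermark, then averages the key-derived scores of the tokens in each text. Everything here is an assumption made for illustration: the 16-token uniform vocabulary, the hash-based scoring function, the two-token context window and the use of a plain mean as the detection statistic. The helper functions are repeated so that the block runs on its own.

```python
import hashlib
import random

LAYERS = 3  # tournament rounds (assumed)
WINDOW = 2  # preceding tokens that feed the scores (assumed)
VOCAB = [f"tok{i}" for i in range(16)]  # toy vocabulary, equally likely tokens


def g_score(secret, context, token, layer):
    """Pseudorandom score between 0 and 1 derived from the secret key."""
    msg = "|".join([secret, " ".join(context), token, str(layer)])
    digest = hashlib.sha256(msg.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def watermarked_token(secret, context, rng):
    """Tournament sampling over the toy vocabulary."""
    candidates = rng.choices(VOCAB, k=2**LAYERS)
    for layer in range(LAYERS):
        candidates = [
            max(pair, key=lambda tok: g_score(secret, context, tok, layer))
            for pair in zip(candidates[0::2], candidates[1::2])
        ]
    return candidates[0]


def generate(secret, length, rng, watermark):
    """Produce a token sequence, with or without the watermark."""
    text = []
    for _ in range(length):
        context = text[-WINDOW:]
        if watermark:
            text.append(watermarked_token(secret, context, rng))
        else:
            text.append(rng.choice(VOCAB))
    return text


def detection_score(tokens, secret):
    """Mean key-derived score of the tokens; about 0.5 is expected by chance."""
    total = 0.0
    for i, tok in enumerate(tokens):
        context = tokens[max(0, i - WINDOW):i]
        total += sum(g_score(secret, context, tok, layer) for layer in range(LAYERS))
    return total / (len(tokens) * LAYERS)


rng = random.Random(0)
marked = generate("demo-key", 400, rng, watermark=True)
plain = generate("demo-key", 400, rng, watermark=False)

marked_score = detection_score(marked, "demo-key")        # expected well above 0.5
plain_score = detection_score(plain, "demo-key")          # expected near 0.5
wrong_key_score = detection_score(marked, "another-key")  # expected near 0.5
```

In this toy setting, only someone holding the right key sees the elevated scores, which is consistent with detection depending on a key that can be kept secret.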

If the watermark proves helpful for well-intentioned uses, the effort will be worth it. “The guiding philosophy was that we want to build a tool that can be improved by the community,” says Kohli.