OpenAI Recruits Three Senior Computer Vision Engineers From DeepMind for Its Zurich Office

Zhai, Beyer, and Kolesnikov all live in Zurich, according to LinkedIn, which has become a relatively prominent tech hub in Europe. The city is home to ETH Zurich, a public research university with a globally renowned computer science department. Apple has also reportedly poached a number of AI experts from Google to work at “a secretive European laboratory in Zurich,” the Financial Times reported earlier this year.

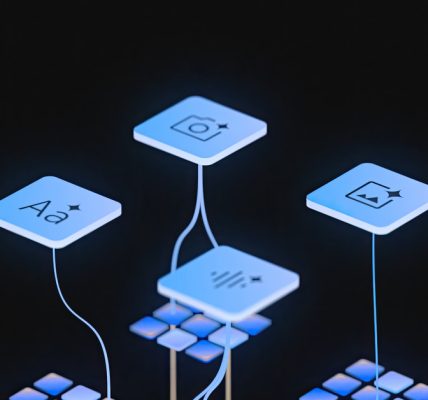

OpenAI announced today it has hired three senior computer vision and machine learning engineers from rival Google DeepMind, all of whom will work in a newly opened OpenAI office in Zurich, Switzerland. OpenAI executives told staff in an internal memo on Tuesday that Lucas Beyer, Alexander Kolesnikov, and Xiaohua Zhai will be joining the company to work on multimodal AI, artificial intelligence models capable of performing tasks in different mediums ranging from images to audio.

Over the past few months, a number of key figures have left OpenAI, either to join direct competitors like DeepMind and Anthropic or to launch their own ventures. Ilya Sutskever, an OpenAI cofounder and its former chief scientist, left to launch Safe Superintelligence, a startup focused on AI safety and existential risks. Mira Murati, OpenAI’s former chief technology officer, is raising money for a new artificial intelligence venture after leaving the company.

As they race to develop the most advanced models, OpenAI and its competitors are competing intensely to hire from a limited pool of top researchers around the world, often offering them annual compensation packages worth seven figures or more. It’s not uncommon for the most sought-after talent to hop between companies.

All three of the new hires have previously worked closely together, according to Beyer’s personal website. While at DeepMind, Beyer kept a close eye on OpenAI and its research, which he frequently posted about to his more than 70,000 followers on X.

OpenAI has long been at the forefront of multimodal AI; it released the first version of its text-to-image platform Dall-E in 2021. Its flagship chatbot, ChatGPT, was initially able to interact only with text inputs. The company later added voice and image features as multimodal functionality became an increasingly important part of its product line and AI research. (The latest version of Dall-E is available directly within ChatGPT.) Sora, a highly anticipated generative AI video product, has yet to become widely available.

OpenAI, maker of ChatGPT and one of the most prominent artificial intelligence companies in the world, said today that it has entered a partnership with Anduril, a defense startup that makes missiles, drones, and software for the United States military. It is the latest in a series of announcements by tech companies in Silicon Valley that have warmed to the defense industry.

A former OpenAI employee, speaking on the condition of anonymity, says that Anduril will use OpenAI’s technology to assess drone threats more quickly and accurately, giving operators the information they need while keeping them out of harm’s way.

OpenAI altered its policy on the use of its AI for military applications earlier this year. A source who worked at the company at the time says that some employees were unhappy with the change, but there were no open protests. The US military already uses some OpenAI technology, according to reporting by The Intercept.

Anduril is developing an advanced air defense system featuring a swarm of small, autonomous aircraft that work together on missions. These aircraft are controlled through an interface powered by a large language model, which interprets natural language commands and translates them into instructions that both human pilots and the drones can understand and execute. Until now, Anduril has been using open source language models for testing purposes.

Anduril is not currently known to be using advanced AI to control its autonomous systems or to allow them to make their own decisions. Such a move would be riskier, particularly given the unpredictability of today’s models.