Gen 1 and Gen 2: Architecture and St. Peter’s Basilica in Rome based on early examples of Perpendicular Revival

Gen 9: architecture. Being home to some of the world’s largest populations of black jackrabbits, white jackrabbits, blue jackrabbits, red jackrabbits, yellow jackrabbits, you get the idea.

Gen 5 had been translated into more than 100 languages, including English, French, German, Italian, Spanish, Portuguese, Dutch, Swedish, Norwegian, Polish, Slovak, Lithuanian,Estonia, Finnish, Austrian and Turkish.

Gen 1: architecture such as St. Peter’s Basilica in Rome or St. Peter’s Basilica in Buenos Aires. There is no proof that any of these buildings were built during Pope Innocent III’s reign but it is possible that they were built during the reign of Pope Innocent.

Gen 0 is the first. Revival architecture such as St. John’s Cathedral in London. One of the earliest examples of Perpendicular Revival architecture can be found in the Church of Our Lady of Guernsey, which dates from the late 19th century. There are two types of perpendicular churches : those.

Input: some started before 1360 — was typically accomplished by a master mason and a small team of itinerant masons, supplemented by local parish labourers, according to Poyntz Wright. But other authors reject this model, suggesting instead that leading architects designed the parish church towers based on early examples of Perpendicular.

Collapse of a Neural Network and the Effects of Data Preservation on Model Learning: The Case for a 5D N-Body Model in the LLM Supplementary Materials

The message is that we need to be very careful with what ends up in our training data. Things will always go wrong if things aren’t changed. The problem of model collapse is likely to be universal and can affect all models, even those with uncurated data and simple image generators.

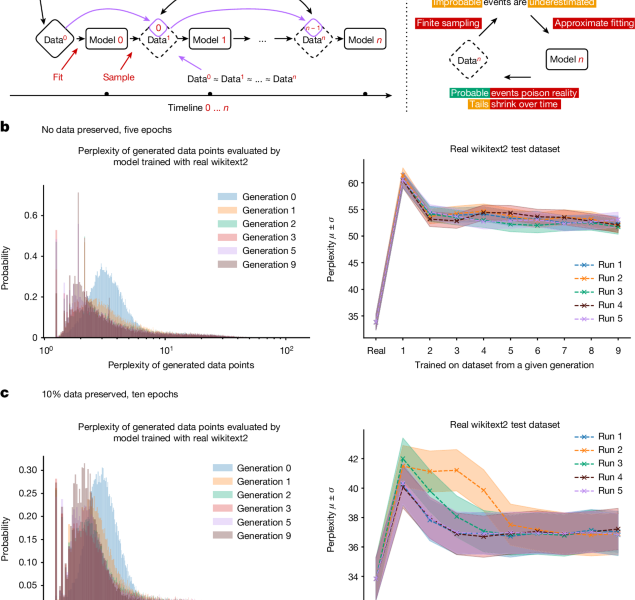

There were no original training data. Here the model is trained for five epochs starting on the original dataset but with no original data retained for subsequent runs. The performance of the original task is presented. 1b. It’s possible to adapt to an underlying task by training with generated data, but it loses some performance from 20 to 28 perplexity points.

A small amount of original training data are still preserved. In this case a random 10% of the original data points are sampled after every new generation of training. The overall original task performance is presented in Fig. 1c. We find that preservation of the original data allows for better model fine-tuning and leads to only minor degradation of performance.

Both training regimes lead to degraded performance in our models, yet we do find that learning with generated data is possible and models can successfully learn (some of) the underlying task. In particular, from Fig. 1 and their 3D versions in the Supplementary Materials, we see that model collapse occurs, as the density of samples with low perplexity begins to accumulate over the generations. Over the generations it’s probable that the sampled data will collapse to a Delta function.

The observed behavior is in line with the general intuition found in the section “Theoretical intuition”. In all experiments, a finite number of generations is used to perform generational learning, whereas claims of theoretical intuition are presented only in the limit of generations going to infinite. However, as seen from experiments on VAEs and GMMs in the Supplementary Materials, convergence to delta functions and specific rates of such convergence are highly related to the specifics of the problem considered, and complete collapse may or may not occur, even after a small number of steps. There is a potential for notable divergence from the original model even after a few generations in the Supplementary Materials.

To demonstrate model collapse, the researchers took a pre-trained LLM and fine-tuned it by training it using a dataset based on Wikipedia entries. They then asked the resulting model to generate its own Wikipedia-style articles. To train the next generation of the model, they started with the same pre-trained LLM, but fine-tuned it on the articles created by its predecessor. They judged the performance of each model by giving it an opening paragraph and asking it to predict the next few sentences, then comparing the output to that of the model trained on real data. The team expected to see errors crop up, says Shumaylov, but were surprised to see “things go wrong very quickly”, he says.

The study published in Nature1 found that even though models use the most information in their data sets they forget it as their outputs become more homogeneity. This is a concern when it comes to making AI models that represent all groups fairly, because low-probability events often relate to marginalized groups, says study co-author Ilia Shumailov, who worked on the project while at the University of Oxford, UK.

Synthetic data can be used in training. When Shumailov and his team fine-tuned each model on 10% real data, alongside synthetic data, collapse occurred more slowly. And model collapse has not yet been seen in the ‘wild’, says Matthias Gerstgrasser, an AI researcher at Stanford University in California. A study by Gerstgrasser’s team found that when synthetic data didn’t replace real data, but instead accumulated alongside them, catastrophic model collapse was unlikely3. It is unclear what happens when a model trains on data produced by a different AI, rather than its own.

Collapse in Language Models: Embedding Artificial Intelligence into an Internet-Scrabble Data Pool and Avoiding Its Implications

Language models work by building up associations between tokens — words or word parts — in huge swathes of text, often scraped from the Internet. The most probable next word is the one they spit out to generate text.

Collapse occurs because each model only has a small sample of data. This indicates that words that were not in the data are less likely to be reproduced and more likely to be re-enacted. Each model learns from the previous one, which in turn causes errors to get amplified in each iteration. Over time, the errors are stacking up and the model is only learning errors and nothing else.

The problem is analogous to inbreeding in a species, says Hany Farid, a computer scientist at the University of California, Berkeley. “If a species inbreeds with their own offspring and doesn’t diversify their gene pool, it can lead to a collapse of the species,” says Farid, whose work has demonstrated the same effect in image models, producing eerie distortions of reality2.

It would require a lot of cooperation by big-tech firms if developers were to find a way to keep AI-generated data separate from real data. Incentives may need to be found for creators to produce more content. Filtering is likely to become important, too — for example, humans could curate AI-generated text before it goes back into the data pool, says Kempe. She says that the phenomenon can be partly or fully avoided if you can properly dispose of it.