Merriam-Webster's Word of the Year is authentic

Merriam-Webster has named authentic as its Word of the Year for 2023. Interest in the word was driven by stories and discussions about artificial intelligence, celebrity culture, identity, and social media.

380,000 new stable materials were dreamed up by DeepMind

A US-based team has used artificial intelligence (AI) to make 41 new materials in 17 days. DeepMind's system, named GNoME (Graph Networks for Materials Exploration), predicts which materials are likely to be stable, and the A-Lab, an autonomous laboratory at the Department of Energy's Lawrence Berkeley National Laboratory (Berkeley Lab), worked to synthesize them. Nine of the 41 materials were obtained only after active learning improved their synthesis recipes.

Everyone is rushing to release artificial intelligence products

US-based artificial intelligence firm OpenAI has opened voice conversations in its chatbot ChatGPT to all users. Users can ask questions and hold spoken conversations without leaving the app. Earlier this month, OpenAI's board fired CEO Sam Altman; the company's chief operating officer later told staff the decision "was not made in response to malfeasance or anything related to our financial, business, safety, or security/privacy practices".

Sam Altman returns as chief executive of OpenAI

US-based artificial intelligence (AI) startup OpenAI has said Sam Altman will return as CEO, days after its board removed him. Under the agreement, a new initial board will be chaired by Bret Taylor, with Larry Summers and Adam D'Angelo as members; D'Angelo is the only holdover from the board that removed Altman. Co-founder Greg Brockman, who quit after Altman's ouster, is also returning.

The mystery of the OpenAI chaos

A board member of the US-based artificial intelligence (AI) startup OpenAI said, “We are the only company in the world which has a capped profit structure.” He added, “If you think… GPUs are going to take my job and your job and everyone’s jobs, it seems nice if that company wouldn’t make so much money.” Earlier, it was reported that OpenAI fired its CEO Sam Altman.

95 percent of OpenAI employees threaten to follow Sam Altman

OpenAI co-founder and president Greg Brockman resigned on Friday, hours after CEO Sam Altman was fired and Brockman himself was removed as chair of the board. The board later named former Twitch CEO Emmett Shear as interim CEO, and nearly all of the company's roughly 770 employees signed a letter threatening to quit and follow Altman to Microsoft unless the board resigned. OpenAI is a venture capital-backed company that focuses on developing artificial intelligence (AI) technology for real-world applications.

OpenAI ousts CEO Sam Altman

US-based artificial intelligence (AI) startup OpenAI has removed its co-founder and CEO Sam Altman. The startup said Altman was not "consistently candid in his communications with the board", hindering its ability to exercise its responsibilities. Co-founder Greg Brockman is stepping down as chair of the board, and Mira Murati, the startup's Chief Technology Officer, has been appointed interim CEO.

Who is OpenAI’s new interim CEO?

Sam Altman, who was CEO of AI startup OpenAI, said on Friday that he is leaving the company. "I loved my time at openai," Altman wrote. Shortly before his exit, he had predicted that artificial intelligence would be "the greatest leap forward of any technological revolution we've had so far". Altman co-founded OpenAI in 2015 while serving as president of the startup accelerator Y Combinator.

Powerful computing efforts are being launched to boost research

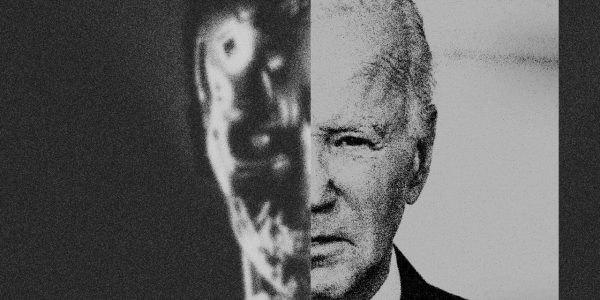

US President Joe Biden has signed an executive order on the "safe, secure and trustworthy" development of artificial intelligence (AI). The order directs agencies that fund life-science research to establish standards that protect against the use of AI to engineer dangerous biological materials. It also calls for at least four new National AI Research Institutes to be established in the US within about 1.5 years.

Artists can use new tools to disrupt artificial intelligence systems

Singer Casey Stoney has questioned why artists whose work is used by large artificial intelligence models should have no way to get paid or credited. "The question for me is…is that truly analogous to…situation where I'm a very popular artist, people love to type my name into Stable Diffusion, you get images…that look like my life's work, and I get $0 for that?" Stoney said.